The incredible amount of data on the Internet is a rich resource for any field of research or personal interest. To effectively harvest that data, you’ll need to become skilled at web scraping. The Python libraries requests and Beautiful Soup are powerful tools for the job. If you like to learn with hands-on examples and you have a basic understanding of Python and HTML, then this tutorial is for you.

The situation: I wanted to extract the chemical identifiers of a set of ~350 chemicals offered by a vendor and compare them to another list. Unfortunately, there is no catalog that neatly tabulates this information, but there is a product catalog PDF that lists the product numbers. The detailed information for each product (including the chemical identifier) can be found on the vendor’s website at a URL like this: vendor.com/product/[product_no]. Let me show you how to solve this problem with bash and Python.

Let’s break the problem down into steps:

1. Extract the list of product numbers (call it list A)
2. Iterate over list A and scrape the chemical identifier from each product page to get a second list (call it list B)
3. Compare list B with the desired list C

Steps 1 and 3 look easy: just some text manipulation. Step 2 is basically the automated version of going to each product webpage, copy-pasting the chemical identifier, and repeating this ~350 times (yup, not going to do that by hand).

Step 1

I have a PDF catalog that looks like this:

| Plate | Well | Product | Product No. |
|---|---|---|---|
| 1 | A1 | chemical x | 1111 |
| 1 | A2 | chemical y | 2222 |
| … | … | … | … |

And of course, when copy-pasted to a text file, it is messed up…

Well, that is quite easy to fix. If we are sure that each table row becomes 4 lines, we can do some bash magic:

and we will get
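
Neither the command nor its output is reproduced here. Given the mention of paste further down, the command was presumably something along the lines of paste - - - - < temp, but that is a guess. As a rough Python equivalent, the sketch below joins every four consecutive lines into one tab-separated row. The function name group_rows and the sample lines are made up for illustration; the sample values come from the table above.

```python
from itertools import islice


def group_rows(lines, width=4):
    """Join every `width` consecutive lines into one tab-separated row."""
    it = iter(lines)
    rows = []
    while True:
        chunk = list(islice(it, width))
        if not chunk:
            break
        # A short final chunk is kept as-is, so a damaged table stays visible.
        rows.append("\t".join(chunk))
    return rows


# Lines as they might look after copy-pasting the table body into a text file.
# When reading a real file, strip the trailing newline from each line first.
pasted = ["1", "A1", "chemical x", "1111", "1", "A2", "chemical y", "2222"]
for row in group_rows(pasted):
    print(row)
```

Each printed row should again have the four columns of the original table: plate, well, product, and product number.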

But beware of empty cells! An empty cell may cause a table row to become fewer than 4 lines and mess up your data. This is why I chose paste in this case, even though we could have just extracted every 4th line with $ sed -n '0~4p' temp. With a quick glance you can easily verify that the data is reformatted to look like the original table.

Once you have checked that the reformatted table looks fine, extract the product numbers, i.e. the 4th column:
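
The extraction command is missing here (in bash, something like cut -f4 would do it for tab-separated rows). A minimal Python sketch of the same idea follows, assuming the rows are tab-separated as paste would produce them. The helper name product_numbers is hypothetical. It also rejects any row that does not have exactly four fields, which catches the empty-cell problem described above.

```python
def product_numbers(rows):
    """Return the 4th tab-separated field of each row, rejecting malformed rows."""
    numbers = []
    for lineno, row in enumerate(rows, start=1):
        fields = row.split("\t")
        if len(fields) != 4:
            raise ValueError(
                f"row {lineno} has {len(fields)} fields, expected 4: {row!r}"
            )
        numbers.append(fields[3].strip())
    return numbers


# Sample rows taken from the table above.
rows = ["1\tA1\tchemical x\t1111", "1\tA2\tchemical y\t2222"]
print(product_numbers(rows))  # ['1111', '2222']
```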

Step 2

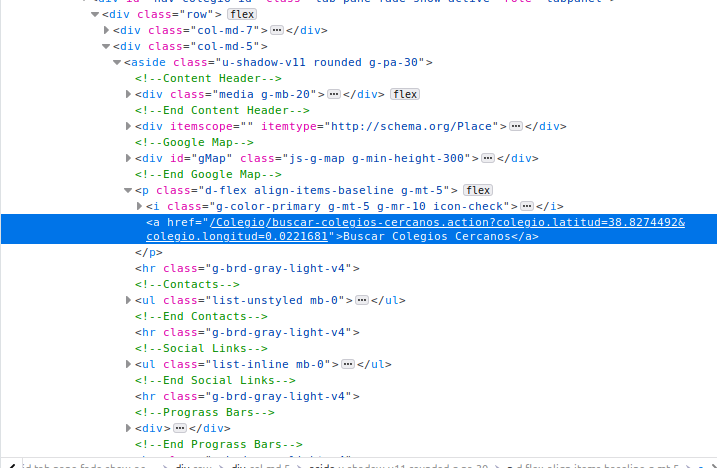

Let’s do a test by scraping from the webpage of one product. Go to the webpage in your browser and do “Inspect element” to inspect the HTML underneath. I found my chemical identifier nicely contained in a <div> tag which has the id inchiKey.

Make sure you have the packages requests and BeautifulSoup and run this Python script:
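
The script itself is not shown here, so the following is only a sketch of what such a test could look like, not the original code. It assumes the URL pattern vendor.com/product/[product_no] from above (the https scheme is an assumption) and the <div> with id inchiKey. The function names and the sample HTML, including the placeholder identifier, are made up for illustration.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder pattern from the text; the https scheme is an assumption.
BASE_URL = "https://vendor.com/product/{}"


def extract_inchikey(html):
    """Return the text of the <div id="inchiKey"> element, or None if absent."""
    soup = BeautifulSoup(html, "html.parser")
    div = soup.find("div", id="inchiKey")
    return div.get_text(strip=True) if div is not None else None


def fetch_inchikey(product_no, timeout=10):
    """Download one product page and pull out the identifier."""
    response = requests.get(BASE_URL.format(product_no), timeout=timeout)
    response.raise_for_status()  # fail loudly on 404 and similar errors
    return extract_inchikey(response.text)


# Offline check against a made-up snippet shaped like the page described above.
sample = (
    '<html><body><div id="inchiKey">\n'
    "  AAAAAAAAAAAAAA-BBBBBBBBBB-C\n"
    "</div></body></html>"
)
print(extract_inchikey(sample))  # AAAAAAAAAAAAAA-BBBBBBBBBB-C
```

Against the real site you would call fetch_inchikey with one product number, for example fetch_inchikey("1111"), and compare the result with what the browser shows.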

Do you get the correct chemical identifier? If so, it’s time to wrap this in a loop that iterates over the list of product numbers:
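
The loop is also missing from this copy. One way it could be written is sketched below, under the same assumptions as before: the URL pattern and the inchiKey id come from the text, while the https scheme, the function names, and the one-second pause between requests are my own additions. Invalid product numbers are recorded with None instead of stopping the run.

```python
import time

import requests
from bs4 import BeautifulSoup

# Placeholder pattern from the text; the https scheme is an assumption.
BASE_URL = "https://vendor.com/product/{}"


def fetch_inchikey(product_no):
    """Fetch one product page and return the inchiKey div text, or None."""
    response = requests.get(BASE_URL.format(product_no), timeout=10)
    response.raise_for_status()
    div = BeautifulSoup(response.text, "html.parser").find("div", id="inchiKey")
    return div.get_text(strip=True) if div is not None else None


def scrape_all(product_numbers, fetch=fetch_inchikey, delay=1.0):
    """Return (product_no, identifier or None) pairs, one per input number."""
    results = []
    for number in product_numbers:
        try:
            key = fetch(number)
        except requests.RequestException:
            key = None  # invalid product number or network problem
        results.append((number, key))
        print(number, key)  # print both so the pairing can be checked by eye
        time.sleep(delay)  # small pause to go easy on the vendor's server
    return results
```

Passing the fetch function as a parameter makes it easy to try the loop with a stand-in before pointing it at the live site.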

Together with the chemical id, I printed out the product number again to ensure correspondence. Some product numbers may be invalid and thus won’t yield a chemical id, and printing both guards against a silent mismatch.

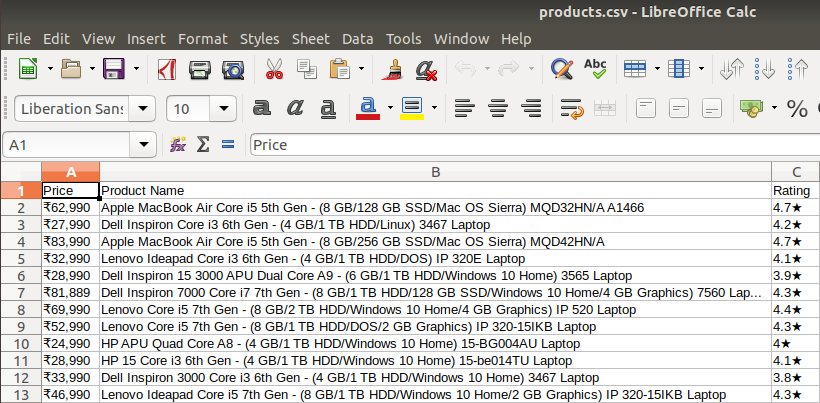

I get something like this as the output:

Notice the extraneous spaces and blank lines. Instead of wrangling Python into producing more consistent output, I cleaned it up with bash, which is much easier:
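
The bash cleanup command is not shown in this copy. If you would rather stay in Python, the same kind of cleanup can be sketched as below. The raw string is a made-up stand-in, since the actual output format is not shown, and clean_lines is a hypothetical helper name.

```python
def clean_lines(text):
    """Strip surrounding whitespace from each line and drop empty lines."""
    return [line.strip() for line in text.splitlines() if line.strip()]


# Made-up example of messy output with stray spaces and blank lines.
raw = "1111 \n\n  AAAAAAAAAAAAAA-BBBBBBBBBB-C\n\n2222\n\n"
print(clean_lines(raw))  # ['1111', 'AAAAAAAAAAAAAA-BBBBBBBBBB-C', '2222']
```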

You can confirm that each product number corresponds to a chemical identifier, then extract just the identifiers like in Step 1:

Step 3

Easy:

comm outputs 3 columns: (1) C-B (2) B-C (3) C ∩ B. The flag -12 suppresses columns 1 and 2. You can similarly suppress the other columns to output what you need.
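
For comparison, the three comm columns map directly onto Python set operations. This is a sketch with made-up identifiers, and compare is a hypothetical helper name. Note two differences: comm expects both input files to be sorted, and sets discard duplicate entries, which comm does not.

```python
def compare(list_c, list_b):
    """Return (only in C, only in B, in both), each as a sorted list."""
    c, b = set(list_c), set(list_b)
    return sorted(c - b), sorted(b - c), sorted(c & b)


desired = ["KEY-A", "KEY-B", "KEY-C"]  # list C (made-up values)
scraped = ["KEY-B", "KEY-C", "KEY-D"]  # list B (made-up values)

only_c, only_b, both = compare(desired, scraped)
print(both)    # ['KEY-B', 'KEY-C'], the equivalent of comm -12
print(only_c)  # ['KEY-A']
print(only_b)  # ['KEY-D']
```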

Bottom line

- Verify, verify your data at every step

- Freely switch between bash and Python according to your needs