OutwitHub is a free web scraping tool and a good option if you need to scrape some data from the web quickly. With its automation features, it browses automatically through a series of web pages and performs extraction tasks. It can export the data into numerous formats (JSON, XLSX, SQL, HTML, CSV, etc.).

Friday, January 22, 2021

Merriam-Webster defines 'research' as 'careful or diligent search; studious inquiry or examination; the collecting of information about a particular subject'. It's not easy to conduct academic research, so here we round up 30 tools that will facilitate your research, covering research management tools, reference and index resources, and information collection tools.

If you are also looking for data resources for your research, you may want to view our old post: 70 Amazing Free Data Resources You should know, covering government, crime, health, financial, social media, journalism, real estate...

10 Research Management Tools

1. MarginNote

License: Commercial

MarginNote is a powerful reading tool for learners. Whether you are a student, a teacher, a researcher, a lawyer, or simply someone with a curious mind, MarginNote can help you quickly organize, study, and manage large volumes of PDFs and EPUBs. This all-in-one learning app lets you highlight PDFs and EPUBs, take notes, create mind maps, and review flashcards, which saves you from switching endlessly between different apps. It is available on Mac, iPad, and iPhone.

https://marginnote.com/

2. Zotero

License: Free

Zotero is a free, easy-to-use tool to help you collect, organize, cite, and share research. It is available for Mac, Windows, and Linux. It supports managing bibliographic data and related research materials (such as PDF files). Notable features include web browser integration, online syncing, generation of in-text citations, footnotes, and bibliographies, as well as integration with the word processors Microsoft Word and LibreOffice Writer.

https://www.zotero.org

3. RefWorks

License: Commercial

RefWorks is a web-based commercial reference management software package. Users' reference databases are stored online, allowing them to be accessed and updated from any computer with an internet connection. Institutional licenses allow universities to subscribe to RefWorks on behalf of all their students, faculty and staff. Individual licenses are also available. The software enables linking from a user's RefWorks account to electronic editions of journals to which the institution's library subscribes.

https://www.refworks.com

4. EndNote

License: Commercial

EndNote is the industry standard software tool for publishing and managing bibliographies, citations and references on the Windows and Macintosh desktop. EndNote X9 is the reference management software that not only frees you from the tedious work of manually collecting and curating your research materials and formatting bibliographies but also gives you greater ease and control in coordinating with your colleagues.

https://endnote.com

5. Mendeley

License: Free

Mendeley Desktop is free academic software (Windows, Mac, Linux) for organizing and sharing research papers and generating bibliographies with 1GB of free online storage to automatically back up and synchronize your library across desktop, web and mobile.

https://www.mendeley.com

6. ReadCube

License: Commercial

ReadCube is a desktop and browser-based program for managing, annotating, and accessing academic research articles. It can sync your entire library including notes, lists, annotations, and even highlights across all of your devices including your desktop (Mac/PC), mobile devices (iOS/Android/Kindle), or even through the Web.

https://www.readcube.com

7. Qiqqa

License: Free

Qiqqa is a free research and reference manager. Its free version is ad-supported and includes PDF management, annotation reports, Expedition, and 2 GB of free online storage.

http://www.qiqqa.com

8. Docear

License: Free

Docear offers a single-section user interface that allows comprehensive organization of your literature; a literature suite concept that combines several tools in a single application (PDF management, reference management, mind mapping, …); and a recommender system that helps you discover new literature. Docear recommends papers that are free, available in full text, instantly downloadable, and tailored to your information needs.

http://www.docear.org

9. Paperpile

License: Commercial

Paperpile is a web-based commercial reference management software, with special emphasis on integration with Google Docs and Google Scholar. Parts of Paperpile are implemented as a Google Chrome browser extension.

https://paperpile.com/

10. JabRef

License: Free

JabRef is an open-source bibliography reference manager. The native file format used by JabRef is BibTeX, the standard LaTeX bibliography format. JabRef is a desktop application and runs on the Java VM (version 8), and works equally well on Windows, Linux, and Mac OS X. Entries can be searched in external databases and BibTeX entries can be fetched from there. Example sources include arXiv, CiteseerX, Google Scholar, Medline, GVK, IEEEXplore, and Springer.

http://www.jabref.org/
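To give a feel for the BibTeX format mentioned above, here is a minimal sketch in Python that renders a single entry as text. The `format_bibtex` helper and the sample reference are hypothetical and made up for illustration; they are not part of JabRef.

```python
def format_bibtex(entry_type, cite_key, fields):
    """Render one BibTeX entry as text; `fields` maps field names to values."""
    lines = [f"@{entry_type}{{{cite_key},"]
    for name, value in fields.items():
        # Each field is written as: name = {value},
        lines.append(f"  {name} = {{{value}}},")
    lines.append("}")
    return "\n".join(lines)


# Hypothetical reference, invented purely for illustration.
entry = format_bibtex(
    "article",
    "doe2020example",
    {"author": "Doe, Jane", "title": "An Example Title", "year": "2020"},
)
print(entry)
```

A file made of entries like this is what a BibTeX-based reference manager reads and writes, which is why such libraries stay portable across LaTeX tools.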

10 Reference/Index Resources

1. Google Scholar

Google Scholar is a freely accessible web search engine that indexes the full text or metadata of scholarly literature across an array of publishing formats and disciplines. It includes most peer-reviewed online academic journals and books, conference papers, theses and dissertations, preprints, abstracts, technical reports, and other scholarly literature, including court opinions and patents.

2. arXiv

arXiv (pronounced 'archive') is a repository of electronic preprints (known as e-prints) approved for publication after moderation, that consists of scientific papers in the fields of mathematics, physics, astronomy, electrical engineering, computer science, quantitative biology, statistics, and quantitative finance, which can be accessed online. In many fields of mathematics and physics, almost all scientific papers are self-archived on the arXiv repository.

3. Springer

Springer Science+Business Media, or Springer, part of Springer Nature, has published more than 2,900 journals and 290,000 books covering science, the humanities, technology, medicine, and more.

4. Hyper Articles en Ligne

Hyper Articles en Ligne (HAL) is an open archive where authors can deposit scholarly documents from all academic fields. It is run by the Centre pour la communication scientifique directe, which is part of the French National Centre for Scientific Research. An uploaded document does not need to have been published or even to be intended for publication. It may be posted to HAL as long as its scientific content justifies it.

5. MEDLINE

MEDLINE (Medical Literature Analysis and Retrieval System Online, or MEDLARS Online) is a bibliographic database of life sciences and biomedical information. It includes bibliographic information for articles from academic journals covering medicine, nursing, pharmacy, dentistry, veterinary medicine, and health care. MEDLINE also covers much of the literature in biology and biochemistry, as well as fields such as molecular evolution.

Compiled by the United States National Library of Medicine (NLM), MEDLINE is freely available on the Internet and searchable via PubMed and NLM's National Center for Biotechnology Information's Entrez system.
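As a rough illustration of searching PubMed programmatically, the sketch below builds a search URL for NCBI's E-utilities `esearch` endpoint. The function name and the sample search term are assumptions made for this example, and no request is actually sent; check NCBI's usage guidelines before querying the service.

```python
from urllib.parse import urlencode, urlparse, parse_qs

# Address of the E-utilities search endpoint provided by NCBI.
ESEARCH_URL = "https://eutils.ncbi.nlm.nih.gov/entrez/eutils/esearch.fcgi"


def build_pubmed_search_url(term, max_results=20):
    """Return an esearch URL for `term` against the PubMed database."""
    params = {
        "db": "pubmed",          # search the PubMed database
        "term": term,            # the query text
        "retmax": max_results,   # maximum number of IDs to return
        "retmode": "json",       # ask for a JSON response
    }
    return f"{ESEARCH_URL}?{urlencode(params)}"


# Hypothetical query, chosen only as an example.
url = build_pubmed_search_url("molecular evolution", max_results=5)
print(url)
```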

6. ResearchGate

ResearchGate is a social networking site for scientists and researchers to share papers, ask and answer questions, and find collaborators. According to a study by Nature and an article in Times Higher Education, it is the largest academic social network in terms of active users.

7. CiteSeerx

Owner: Pennsylvania State University

CiteSeerx (CiteSeer) is a public search engine and digital library for scientific and academic papers, primarily in the fields of computer and information science. Many consider it to be the first academic paper search engine and the first automated citation indexing system. CiteSeer holds United States patent #6289342, titled 'Autonomous citation indexing and literature browsing using citation context'.

8. Scopus

Owner: Elsevier

Scopus is the world's largest abstract and citation database of peer-reviewed research literature, with over 22,000 titles from more than 5,000 international publishers. You can use its free author lookup to search for any author, or use the Author Feedback Wizard to verify your Scopus Author Profile.

9. Emerald Group Publishing

Emerald Publishing was founded in 1967, and now manages a portfolio of nearly 300 journals, more than 2,500 books, and over 1,500 teaching cases, covering the fields of management, business, education, library studies, health care, and engineering.

10. Web of Science

Owner: Clarivate Analytics (United States)

Web of Science (previously known as Web of Knowledge) is an online subscription-based scientific citation indexing service originally produced by the Institute for Scientific Information (ISI).

10 Information Collection Tools: Survey & Web Data Collection Tools

1. Google Forms

Google Forms is a simple option for you if you already have a Google account. It supports menu search, a shuffle of questions for randomized order, limiting responses to once per person, custom themes, automatically generating answer suggestions when creating forms, and an 'Upload file' option for users answering to share content through.

Moreover, responses can be synced to Google Drive, and users can request file uploads from individuals outside their company, with the storage cap initially set at 1 GB.

https://www.google.com/forms/about

2. SurveyMonkey

SurveyMonkey is a well-known name in the field, but it can be costly. It is a great choice if you want an easy user interface for basic surveys: its free plan supports unlimited surveys, although each survey is limited to 10 questions.

https://www.surveymonkey.com

3. SurveyGizmo

SurveyGizmo can be customized to meet a wide range of data-collection demands. The free version has up to 25 question types, letting you write a survey that caters to specific needs. It also offers nearly 100 different question types that can all be customized to the user’s liking.

https://www.surveygizmo.com

4. PollDaddy

PollDaddy is online survey software that allows users to embed surveys on their website or invite respondents via email. Its free version supports unlimited polls, 19 types of questions, and even adding images, videos, and content from YouTube, Flickr, Google Maps, and more.

https://polldaddy.com

5. LimeSurvey

LimeSurvey is open-source survey software, available as a professional SaaS solution or as a self-hosted Community Edition. The free tier of the professional version provides 25 responses per month with an unlimited number of surveys, unlimited administrators, and 10 MB of upload storage.

https://www.limesurvey.org

Web Data Collection Tools

1. Octoparse

Octoparse is an easy-to-use web scraping tool for people without a prior tech background. Its free version offers unlimited pages per crawl, 10 crawlers, and up to 10,000 records per export. If you collect more than 10,000 records, you can pay $5.9 to export all the data. If you need to track dynamic data in real time, you may want to use Octoparse’s premium feature: scheduled cloud extraction.

Read its customer stories to get an idea of how web scraping enhances businesses.

2. Parsehub

Parsehub is another desktop web scraping application that is friendly to non-programmers. It is available for Windows, Mac OS X, and Linux. Its free version offers 200 pages per crawl, 5 public projects, and 14 days of data retention.

https://www.parsehub.com

3. Docparser

Docparser converts PDF documents into structured and easy-to-handle data, which allows you to extract specific data fields from PDFs and scanned documents, convert PDF to text, PDF to JSON, PDF to XML, convert PDF tables into CSV or Excel, etc. Its starting price is $19, which includes 100 parsing credits.

https://docparser.com

4. Scrapy

Scrapy is an open-source and collaborative framework for extracting the data you need from websites in a fast, simple, yet extensible way.

https://scrapy.org

5. Feedity

Feedity automagically extracts relevant content & data from public webpages to create auto-updating RSS feeds. Instantly convert online news, articles, discussion forums, reviews, jobs, events, products, blogs, press releases, social media posts, or any other Web content into subscribable or publishable notifications. The starter version offers 20 feeds and 6 hours update interval, with a cost of $9 per month.

https://feedity.com
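To make the idea of turning extracted content into an RSS feed more concrete, here is a minimal sketch that builds an RSS 2.0 document with Python's standard library. It does not reflect how Feedity works internally; the `build_rss` helper, the feed title, and the example.com links are all hypothetical.

```python
import xml.etree.ElementTree as ET


def build_rss(feed_title, feed_link, items):
    """Return an RSS 2.0 document (as a string) for a list of item dicts."""
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = feed_title
    ET.SubElement(channel, "link").text = feed_link
    ET.SubElement(channel, "description").text = f"Auto-generated feed for {feed_title}"
    for item in items:
        node = ET.SubElement(channel, "item")
        ET.SubElement(node, "title").text = item["title"]
        ET.SubElement(node, "link").text = item["link"]
    return ET.tostring(rss, encoding="unicode")


# Hypothetical items, standing in for content extracted from a web page.
feed_xml = build_rss(
    "Example News",
    "https://example.com/news",
    [
        {"title": "First post", "link": "https://example.com/news/1"},
        {"title": "Second post", "link": "https://example.com/news/2"},
    ],
)
print(feed_xml)
```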

Author: Surie M. (Octoparse Team)

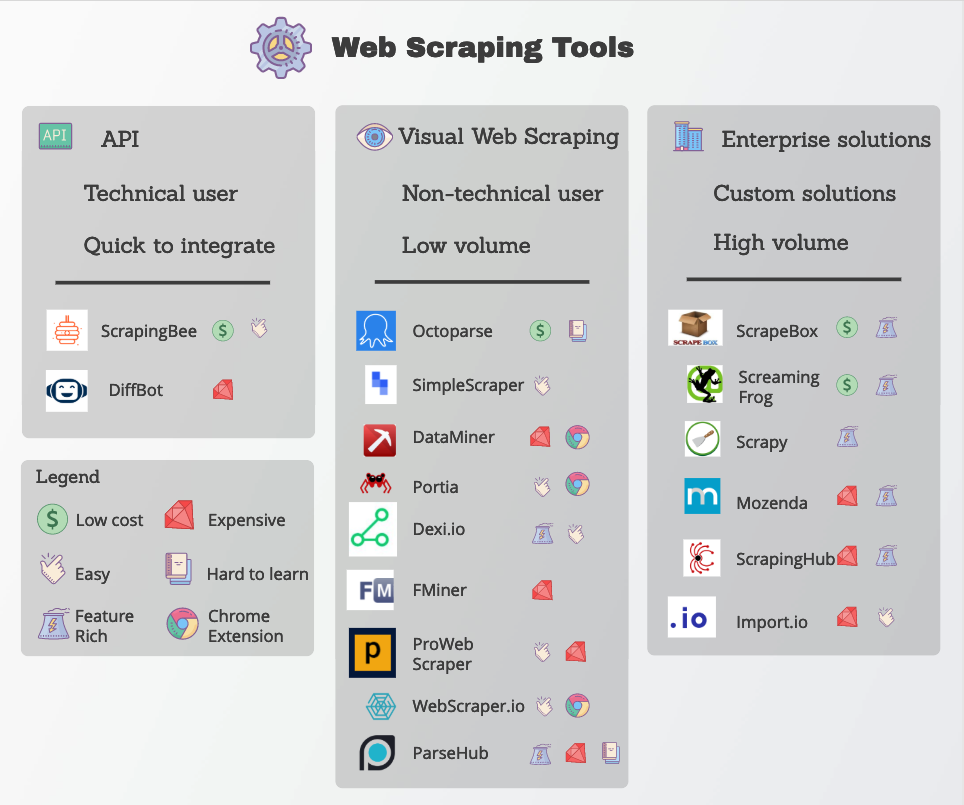

Monday, January 18, 2021

Web scraping (also termed web data extraction, screen scraping, or web harvesting) is a technique for extracting data from websites. It turns unstructured data into structured data that can be stored on your local computer or in a database.
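As a small illustration of turning markup into structured data, the sketch below parses an HTML table into a list of records using only Python's standard library. The `TableRowParser` class and the sample page are assumptions made for this example; real pages are messier and usually need a more robust parser.

```python
from html.parser import HTMLParser


class TableRowParser(HTMLParser):
    """Collect the text of every <td> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None       # cells of the row currently being read
        self._in_cell = False  # True while inside a <td> element

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_data(self, data):
        if self._in_cell:
            self._row[-1] += data

    def handle_endtag(self, tag):
        if tag == "td":
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            if self._row:
                self.rows.append([cell.strip() for cell in self._row])
            self._row = None


# Hypothetical page fragment used as input.
page = """
<table>
  <tr><td>Zotero</td><td>Free</td></tr>
  <tr><td>EndNote</td><td>Commercial</td></tr>
</table>
"""

parser = TableRowParser()
parser.feed(page)
records = [{"tool": name, "license": lic} for name, lic in parser.rows]
print(records)
```

Once the data is in a list of dictionaries like `records`, it can be saved to a file or loaded into a database.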

It can be difficult to build a web scraper for people who don’t know anything about coding. Luckily, there are tools available for people with or without programming skills. Also, if you're seeking a job as a big data developer, using a web scraper can make your data collection more efficient and improve your competitiveness. Here is our list of the 30 most popular web scraping tools, ranging from open-source libraries to browser extensions to desktop software.

1. Beautiful Soup

Who is this for: Developers who are proficient at programming and want to build a web scraper or web crawler.

Why you should use it: Beautiful Soup is an open-source Python library designed for scraping HTML and XML files. It is one of the most widely used Python parsers. If you have programming skills, it is a natural fit for scraping projects written in Python.

2. Octoparse

Who is this for: People without coding skills in many industries, including e-commerce, investment, cryptocurrency, marketing, real estate, etc. Enterprises with web scraping needs.

Why you should use it: Octoparse is a free-for-life SaaS web data platform. You can use it to scrape web data and turn unstructured or semi-structured data from websites into a structured data set. It also provides ready-to-use web scraping templates for Amazon, eBay, Twitter, BestBuy, and many others. Octoparse also provides a web data service that helps customize scrapers based on your scraping needs.

3. Import.io

Who is this for: Enterprises looking for an integration solution for web data.

Why you should use it: Import.io is a SaaS web data platform. It provides a web scraping solution that allows you to scrape data from websites and organize it into data sets. You can integrate the web data into analytic tools for sales and marketing to gain insight.

4. Mozenda

Who is this for: Enterprises and businesses with scalable data needs.

Why you should use it: Mozenda provides a data extraction tool that makes it easy to capture content from the web. They also provide data visualization services. It eliminates the need to hire a data analyst.

5. Parsehub

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: ParseHub is a visual web scraping tool to get data from the web. You can extract the data by clicking any fields on the website. It also has an IP rotation function that helps change your IP address when you encounter aggressive websites with anti-scraping techniques.

6. CrawlMonster

Who is this for: SEO and marketers

Why you should use it: CrawlMonster is a free web scraping tool. It enables you to scan websites and analyze your website content, source code, page status, etc.

7. Connotate

Who is this for: Enterprises looking for an integration solution for web data.

Why you should use it: Connotate has been working together with Import.io, which provides a solution for automating web data scraping. It provides a web data service that helps you scrape, collect, and handle data.

8. Common Crawl

Who is this for: Researchers, students, and professors.

Why you should use it: Common Crawl was founded on the idea of open source in the digital age. It provides open datasets of crawled websites, containing raw web page data, extracted metadata, and text extractions.

9. Crawly

Who is this for: People with basic data requirements.

Why you should use it: Crawly provides an automatic web scraping service that scrapes a website and turns unstructured data into structured formats like JSON and CSV. It can extract a limited set of elements within seconds, including Title, Text, HTML, Comments, Date, Entity Tags, Author, Image URLs, Videos, Publisher, and Country.
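To show what exporting scraped records to JSON and CSV can look like, here is a minimal sketch using Python's standard library. It is not Crawly's own output format; the records and field names below are invented for illustration.

```python
import csv
import io
import json

# Hypothetical scraped records; every value is a plain string.
records = [
    {"title": "Example article", "author": "Jane Doe", "country": "US"},
    {"title": "Another article", "author": "John Roe", "country": "FR"},
]

# JSON keeps the list-of-objects shape as is.
json_text = json.dumps(records, indent=2)

# CSV flattens the same records into a header row plus one line per record.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["title", "author", "country"])
writer.writeheader()
writer.writerows(records)
csv_text = buffer.getvalue()

print(json_text)
print(csv_text)
```

JSON suits nested data and APIs, while CSV opens directly in spreadsheet software, which is probably why many tools offer both.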

10. Content Grabber

Who is this for: Python developers who are proficient at programming.

Why you should use it: Content Grabber is a web scraping tool targeted at enterprises. You can create your own web scraping agents with its integrated 3rd party tools. It is very flexible in dealing with complex websites and data extraction.

11. Diffbot

Who is this for: Developers and business.

Why you should use it: Diffbot is a web scraping tool that uses machine learning algorithms and public APIs to extract data from web pages. You can use Diffbot for competitor analysis, price monitoring, consumer behavior analysis, and more.

12. Dexi.io

Who is this for: People with programming and scraping skills.

Why you should use it: Dexi.io is a browser-based web crawler. It provides three types of robots: Extractor, Crawler, and Pipes. Pipes has a Master robot feature where one robot can control multiple tasks. It supports many third-party services (captcha solvers, cloud storage, etc.) which you can easily integrate into your robots.

13. DataScraping.co

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: Data Scraping Studio is a free web scraping tool to harvest data from web pages, HTML, XML, and PDF. The desktop client is currently available for Windows only.

14. Easy Web Extract

Who is this for: Businesses with limited data needs, marketers, and researchers who lack programming skills.

Why you should use it: Easy Web Extract is a visual web scraping tool for business purposes. It can extract the content (text, URL, image, files) from web pages and transform results into multiple formats.

15. FMiner

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: FMiner is web scraping software with a visual diagram designer that lets you build a project with a macro recorder, without coding. Its advanced features allow you to scrape dynamic websites that use Ajax and JavaScript.

16. Scrapy

Who is this for: Python developers with programming and scraping skills

Why you should use it: Scrapy can be used to build a web scraper. What is great about this product is that it is built on an asynchronous networking library, which allows it to move on to the next task before the previous one finishes.
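The benefit of asynchronous networking can be sketched with Python's built-in `asyncio` module. Note that this is a stand-in used only to illustrate the idea, not Scrapy's actual API; the `fetch` and `crawl` functions are hypothetical, and `asyncio.sleep` simulates network latency so no real request is made.

```python
import asyncio


async def fetch(url, delay):
    """Pretend to download `url`; the sleep stands in for a network round trip."""
    await asyncio.sleep(delay)
    return f"fetched {url}"


async def crawl(urls):
    """Start all downloads at once instead of waiting for each in turn."""
    tasks = [fetch(url, 0.1) for url in urls]
    # gather runs the tasks concurrently and returns results in input order.
    return await asyncio.gather(*tasks)


# Hypothetical URLs used only as labels.
urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"]
results = asyncio.run(crawl(urls))
print(results)
```

Because the three simulated downloads overlap, the whole batch takes roughly as long as a single download rather than three in a row.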

17. Helium Scraper

Who is this for: Data analysts, Marketers, and researchers who lack programming skills.

Why you should use it: Helium Scraper is a visual web data scraping tool that works pretty well especially on small elements on the website. It has a user-friendly point-and-click interface which makes it easier to use.

18. Scrape.it

Who is this for: People who need scalable data without coding.

Why you should use it: It allows scraped data to be stored on a local drive that you authorize. You can build a scraper using their Web Scraping Language (WSL), which is easy to learn and requires no coding. It is worth a try if you are looking for a security-conscious web scraping tool.

19. ScraperWiki

Who is this for: A Python and R data analysis environment. Ideal for economists, statisticians and data managers who are new to coding.

Why you should use it: ScraperWiki consists of 2 parts. One is QuickCode which is designed for economists, statisticians and data managers with knowledge of Python and R language. The second part is The Sensible Code Company which provides web data service to turn messy information into structured data.

20. Scrapinghub

Who is this for: Python/web scraping developers

Why you should use it: Scrapinghub is a cloud-based web platform. It has four different types of tools: Scrapy Cloud, Portia, Crawlera, and Splash. Scrapinghub also offers a collection of IP addresses covering more than 50 countries, which helps with IP banning problems.

21. Screen-Scraper

Who is this for: For businesses related to the auto, medical, financial and e-commerce industry.

Why you should use it: Screen Scraper is more convenient and basic compared to other web scraping tools like Octoparse. It has a steep learning curve for people without web scraping experience.

22. Salestools.io

Who is this for: Marketers and sales.

Why you should use it: Salestools.io is a web scraping tool that helps salespeople gather data from professional network sites like LinkedIn, AngelList, and Viadeo.

23. ScrapeHero

Who is this for: Investors, Hedge Funds, Market Analysts

Why you should use it: As an API provider, ScrapeHero enables you to turn websites into data. It provides customized web data services for businesses and enterprises.

24. UiPath

Who is this for: Businesses of all sizes.

Why you should use it: UiPath is robotic process automation software that can be used for free web scraping. It allows users to create, deploy, and administer automation in business processes. It is a great option for business users since it helps you create rules for data management.

25. Web Content Extractor

Who is this for: Data analysts, marketers, and researchers who lack programming skills.

Why you should use it: Web Content Extractor is an easy-to-use web scraping tool for individuals and enterprises. You can go to their website and try its 14-day free trial.

26. WebHarvy

Who is this for: Data analysts, Marketers, and researchers who lack programming skills.

Why you should use it: WebHarvy is a point-and-click web scraping tool. It’s designed for non-programmers. They provide helpful web scraping tutorials for beginners. However, the extractor doesn’t allow you to schedule your scraping projects.

27. Web Scraper.io

Who is this for: Data analysts, Marketers, and researchers who lack programming skills.

Why you should use it: Web Scraper is a Chrome browser extension built for scraping data from websites. It’s a free web scraping tool for scraping dynamic web pages.

28. Web Sundew

Who is this for: Enterprises, marketers, and researchers.

Why you should use it: WebSundew is a visual scraping tool that works for structured web data scraping. The Enterprise edition allows you to run the scraping projects at a remote server and publish collected data through FTP.

29. WinAutomation

Who is this for: Developers, business operation leaders, IT professionals

Why you should use it: WinAutomation is a Windows web scraping tool that enables you to automate desktop and web-based tasks.

30. Web Robots

Who is this for: Data analysts, Marketers, and researchers who lack programming skills.

Why you should use it: Web Robots is a cloud-based web scraping platform for scraping dynamic, JavaScript-heavy websites. It has a web browser extension as well as desktop software, making it easy to scrape data from websites.

Closing Thoughts

Extracting data from websites with web scraping tools saves time, especially for those who don't have sufficient coding knowledge. There are many factors you should consider when choosing a tool to facilitate your web scraping, such as ease of use, API integration, cloud-based extraction, large-scale scraping, and project scheduling. Web scraping software like Octoparse not only provides all the features I just mentioned but also provides a data service for teams of all sizes, from start-ups to large enterprises. You can contact us for more information on web scraping.

Ashley is a data enthusiast and passionate blogger with hands-on experience in web scraping. She focuses on capturing web data and analyzing it in a way that empowers companies and businesses with actionable insights. Read her blog to discover practical tips and applications on web data extraction.